Around two years ago, I had a realisation. I had been working in the field of technology-driven innovation for a few years, and what became increasingly apparent was the potential for emerging technologies to improve our lives. However, alongside that was another, starker, realisation that new tech could also exacerbate unfair societal dynamics – such as inequality and discrimination – and our physical and mental health vulnerabilities.

When I had this realisation, I wanted to help organisations innovate responsibly. I wanted them to have an ethical framework in place to ensure tech was always used for good, not bad.

In my role, I spend a lot of time collaborating with the broader ecosystem – including academia and non-profit think tanks – to drive research in this rather uncharted field. I also work closely with my colleagues to develop approaches that operationalise ethics and make it real, with the ultimate goal of helping our clients embed ethical practices throughout their data and AI lifecycle.

While the implementation of tech may have a bad reputation among the public, it is important to say that most people working in the tech space do so with the intention of making a positive impact on the world. Sometimes that’s not enough, though. With investment in AI growing strongly in all regions of the world, and with many governments showing an appetite to be first in class, the adoption of an ethical principle framework is critical.

Without an ethical framework, AI can be used for bad. You’ve only got to look at recent cases in the U.S. where algorithms are exacerbating racial bias, or read reports of big tech companies using CV modeling to discriminate against female applicants for jobs purely on the basis of gender, to know that AI poses a threat to human rights. On top of that, it can threaten our democracy through the sharing of fake news and creation of fake accounts or fake personas throughout election campaigns.

So part of my role is banging the drum for the benefits of ethics in data, AI and other technologies. If these technologies aren’t seen by the public as ethical, you will struggle to gain public confidence in the digitisation of public services.

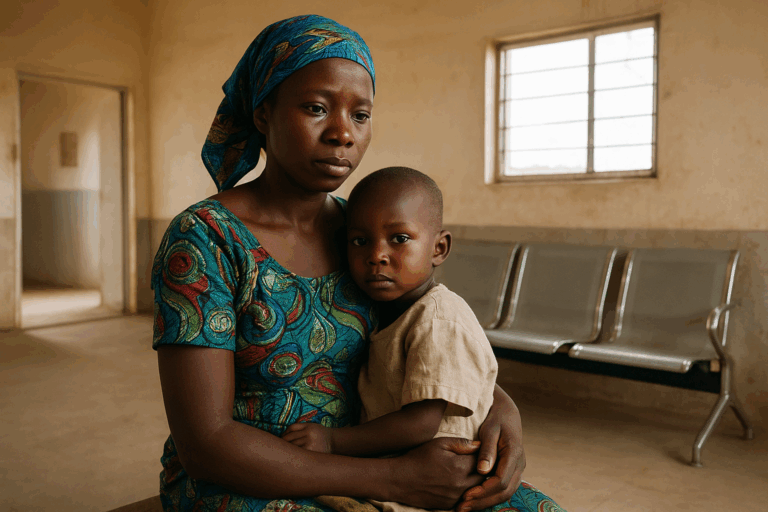

It’s therefore important to ensure that the public is aware that the deployment of these technologies can work for great social good. It can drive greater transparency and meritocracy, lowering barriers to accessing quality services. An example of that is in the health space, where we now have apps that can run blood tests with high levels of accuracy at almost zero cost. Tech can also be a force for good in poorer countries, where technologies help with fundamental challenges such as cleanliness and scarcity of water, or access to quality education.

AI and data can hold a mirror up to society, and help us to understand hidden and unconscious bias. If we are willing to embrace ethically-driven technologies, we have a unique opportunity to transform our communities and how we live for the better.

It is crucial to involve these communities in designing technology-led systems. But in order to have meaningful and efficient public engagement on the design and adoption of AI to solve specific problems, we need to invest in digital and data literacy. This is vital to future-proof our societies and ensure people are prepared for the next waves of innovation and disruption. So, I see ethics as an accelerator for the adoption of effective data literacy programmes.


However, ensuring that an ethical framework is adopted is also very challenging. For a start, cultural diversity makes it difficult to agree on a global standard for ethics in AI. The bar for what good looks like and what bad looks like can depend on social norms and cultural values, which vary from country to country.

The Global Partnership On AI will hopefully go some way to recognising that problem. Launched in June this year by the governments of Australia, Canada, France, Germany, India, Italy, Japan, Mexico, New Zealand, the Republic of Korea, Singapore, Slovenia, the UK, the USA, and the European Union, it has a mission statement to: “Support the responsible and human-centric development and use of AI in a manner consistent with human rights, fundamental freedoms, and our shared democratic values”.

It brings together experts from academia, industry, and governments, and it shows there is a fundamental understanding that ethics has to be at the forefront of AI in order to build public trust and get the most societal change for good out of it.

It’s not perfect, though. It needs more collaboration from developing and poorer countries. And it needs to address the issue that what is classified as ethical today may not be deemed ethical in the future.

In the wrong hands, technological change and innovation can increase inequality across societies. It’s critical, therefore, that ethical frameworks are implemented while AI and the use of data are still at a relatively early stage. There is a lot of work still to be done, and a long way to go, but governments have shown they are willing to lead on it – and that’s the first step on the road to using technology to create a fairer, more equal society.

Rossana Bianchi

Rossana Bianchi is a consultant in AI and Data Ethics Capability, working with some of the world’s biggest brands, as well as academia and policy makers.