Artificial intelligence (AI) isn’t just the result of technological progress. It is also a representation of our societal values, our ethical standards, and our collective intelligence. As the technology is adopted across a wide pool of industries and reshapes how they operate, it’s important to take stock of the impact AI will also have on society.

Although AI can bring productivity benefits, models are often trained on vast quantities of public data, which risks reinforcing the biases already present in that data. As such, it’s imperative for developers of frontier models to ensure they create inclusive and ethical AI.

However, there is no ethical guide available for developers to follow, meaning attempts to eliminate biases don’t always work. Google’s Gemini was widely criticised for generating illogical outputs as a direct result of teams attempting to eliminate biases in training data. Yet this is only one example of a far wider issue: there is no consensus over what ethical and inclusive AI should look like, let alone how to achieve it.

A good starting point is to consider different points of view and experiences during AI development. This can result in better development outcomes, particularly for neurodiverse people, and it is something organisations looking to improve their AI solutions must think about. Only 30.6% of people with autism in the UK are employed but, on average, these employees are 30% more productive, meaning they could bring valuable skills to the challenge of ensuring AI applications are as inclusive as possible.

How neurodiverse people are uniquely suited for AI development

There are significant areas within AI development that neurodiverse people are uniquely suited for. Some have enhanced pattern recognition capabilities and exceptional attention to detail, which makes them well suited to developer work. An example of this is data cleaning, where incorrect, incompatible, corrupted, or duplicated data needs to be scrubbed from training datasets. Neurodiverse people who can spot the subtle patterns that indicate erroneous data are integral to data labelling processes that promote accurate performance.

However, the contribution of neurodiverse people to AI development is not limited to data-related work. It’s estimated that 20-50% of those working in the UK’s creative industries are neurodiverse. Creative problem solving is an essential skill for developing sophisticated algorithms that can recognise complex patterns, or for designing models that must adapt to situations for which they have not been trained. Neurodiversity sometimes brings the ability to think outside the box, making neurodiverse people particularly helpful for these kinds of tasks.

More diverse perspectives foster more inclusive AI

The unique perspectives and lived experiences of neurominorities are necessary considerations to ensure the widest group of people possible can benefit from AI systems. Neurodivergent people can provide insightful feedback on the accessibility of AI tools in addition to contributing to their development. From this, developers can then tune models to be more accessible and intuitive to a diverse range of end users.

That feedback also has the potential to improve the emotional intelligence of AI systems. The reality is that neurodiverse people are often underrepresented in training data. Large language models, for example, are often trained on social media data. However, neurominorities are far less likely to use social media than neurotypical people, leaving models with little data about how neurominorities differ in communication styles and preferences. Including neurodiverse people in both AI development and testing rounds is necessary to maximise the inclusivity of models.

Using AI to bring about societal change

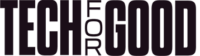

Neurodivergent people make up 15-22% of the world’s population, but under a third of them find employment in the UK. This is partially due to hiring discrimination, but a significant and problematic development in recent years has been AI recruitment systems screening out neurodiverse people. The biases which already exist in businesses today – such as the notion that someone who struggles to maintain eye contact is uninterested – have been amplified by AI recruitment systems.

However, it’s crucial to remember that AI picking up negative stereotypes is also evidence that it can pick up positive and accurate representations too. Promoting the employment of neurominorities will ultimately have a positive impact on society: integrating these people into the economy means they can live more fulfilling lives whilst contributing to public services. The rise of companies using AI in recruitment presents an opportunity to circumvent the biases hiring managers have against neurodiverse people. By training models not to discriminate against the common neurodiverse behaviours and habits that often hold these people back at the interview stage, it becomes less of a challenge to bring them into the world of work.

Diversity is a productivity enhancer

Teams that include neurodivergent professionals are 30% more productive than those without, and diverse teams which include neurodivergent professionals have also seen boosted team morale. Some companies are already aware of the benefits hiring neurodiverse people can bring for both overall productivity and neurotypical employees. Microsoft, for example, runs its own neurodiverse hiring programme, and JP Morgan, through its ‘Autism at Work’ programme, found that for certain technical positions neurodiverse people can be 90-140% more productive than employees who had been working for ten years.

The unique skills and perspectives neurodiverse people bring can also stimulate innovation and creativity within teams. Integrating neurodivergence doesn’t just mean reinforcing inclusivity – it means reinforcing productivity.

AI needs neurodiverse input

As AI systems become more and more present in our everyday lives, it’s important to take stock of the opportunities the technology offers for neurodiverse people. We should also recognise how much development of the technology depends on including neurominorities. If we are ever to reach the point of artificial general intelligence, with systems capable of matching or surpassing human cognition, that must include neurodiverse cognition too.

Matthieu Leroux

Matthieu Leroux is Regional Vice President at UiPath.